Explaining AI: a new frontier in law and regulation

If you can’t explain your AI’s decisions, you probably shouldn’t be using it

An increasing number of organisations in both the private and public sector are turning to AI to help automate bureaucratic processes. Sometimes to gain efficiencies, but also to create new services that were not previously possible.

Any organisation using AI in the UK or EU needs to be aware of the legal requirements for Automated Decision Making (ADM) set out in the GDPR. Public bodies in the UK have a number of relevant statutory obligations including the Public Sector Equality Duty, and must be able to explain their decisions in order to show they are fair and rational— key principles of administrative law.

This article gives a brief overview of current regulations, focussing on the GDPR, and considers how this might affect your choice of algorithm.

What is Automated Decision Making?

In the context of the GDPR, ADM is where a decision is made solely by an algorithm using information about an individual. The GDPR is only concerned with circumstances in which the decision is taken by the algorithm. The Regulations do not apply if the decision is ultimately taken by a human.

However, care is needed before concluding that the GDPR is not relevant: even when there is a “human in the loop”, it isn’t always clear they will have discretion over the final decision.

A complex machine learning model will often be a “black box” for all practical purposes: data is fed in, and it spits out an answer, but we don’t really know how it arrived at this answer. If you cannot explain at least the gist of the decision logic, it becomes difficult to question the answers given by the model. You can, of course, disagree with the answer. But only by taking the algorithm’s answer as given, calculating your own version, and then compare the two. [I use the words model and algorithm interchangeably throughout this article].

The human is not really in the loop. To override the contribution from the enigmatic black box, the human must step outside the loop and replicate the algorithm’s contribution to the decision making process.

In other words, even if human judgement is the final arbiter in a decision-making process, the human only has full discretion over the decision if the algorithmic element of the decision process can either be:

isolated and replicated by the human; and/or,

unravelled so that its inner logic can be deciphered and interrogated.

Therefore, the more complex and opaque the underlying algorithm, the more likely it is to be caught by the GDPR — even with a human in the loop.

What does GDPR say about Automated Decision Making?

Article 22 gives individuals the right not to be subject to fully automated decision making based on data about them, but only when the decision in question has a legal or “similarly significant” effect. If a decision could have such an effect, the GDPR only permits it to be made solely by an algorithm if one of the following criteria is met:

the individual gives consent;

the decision system is required by law; or

the decision system is necessary for a contract.¹

If one of these criteria is met, individuals subject to these automated decisions must be granted additional rights. The ICO gives a clear summary of how these additional rights translate into obligations for the body using the ADM. The body must both conduct a data protection impact assessment (DPIA) and:

give individuals information about the data processing — including the decision making process;

introduce simple ways for an individual to request human intervention or challenge a decision; and

carry out regular checks to make sure that the data processing systems are working as intended.

Note the second point — the individual in question must be given the option of human discretion over the decision. As discussed above, this requires that either: the logic of the decision engine can be unravelled; or, the entire contribution made by the algorithm can be replaced with human produced input.

Does GDPR cover all automated decision making?

No. If the decision making doesn’t concern an individual, and therefore isn’t based on personal data, then GDPR does not apply. For example, an algorithm to allocate resources within a business, such as how much stock to keep at different retail stores, is unlikely to be subject to GDPR rules.

Equally, Article 22 only applies when the automated decision has a legal or similarly significant effect. A legal effect is something that affects an individual’s legal rights. “Similarly significant” is a bit vague, but is intended to capture decisions which could have a significant effect on an individual’s life, such as those in relation to healthcare, eduction, recruitment, or financial circumstances. For more detail, see the EU’s guidance on automated decision making.

The decisions of public sector bodies will likely be caught because they usually have legal or significant effects. For example, this would cover almost any decision regarding social security payments, healthcare, policing, education, etc.

For the private sector things are less clear cut. Take the example of personalised marketing. This will usually be based on some kind of profiling and will almost certainly be automated. In most circumstances, this won’t have a significant effect on an individual, but there will always be exceptions. For example, personalised adverts for jobs, gambling, medical services, education — all have the potential to significantly affect an individual’s life.

Explaining AI — will it reveal its secrets?

GDPR requires that an individual subject to the decision must be able to either request human intervention, or to have sufficient information about how the decision was reached as to be able to challenge the decision. This section considers whether it is always possible to unpick an algorithmic decision to understand how it was made.

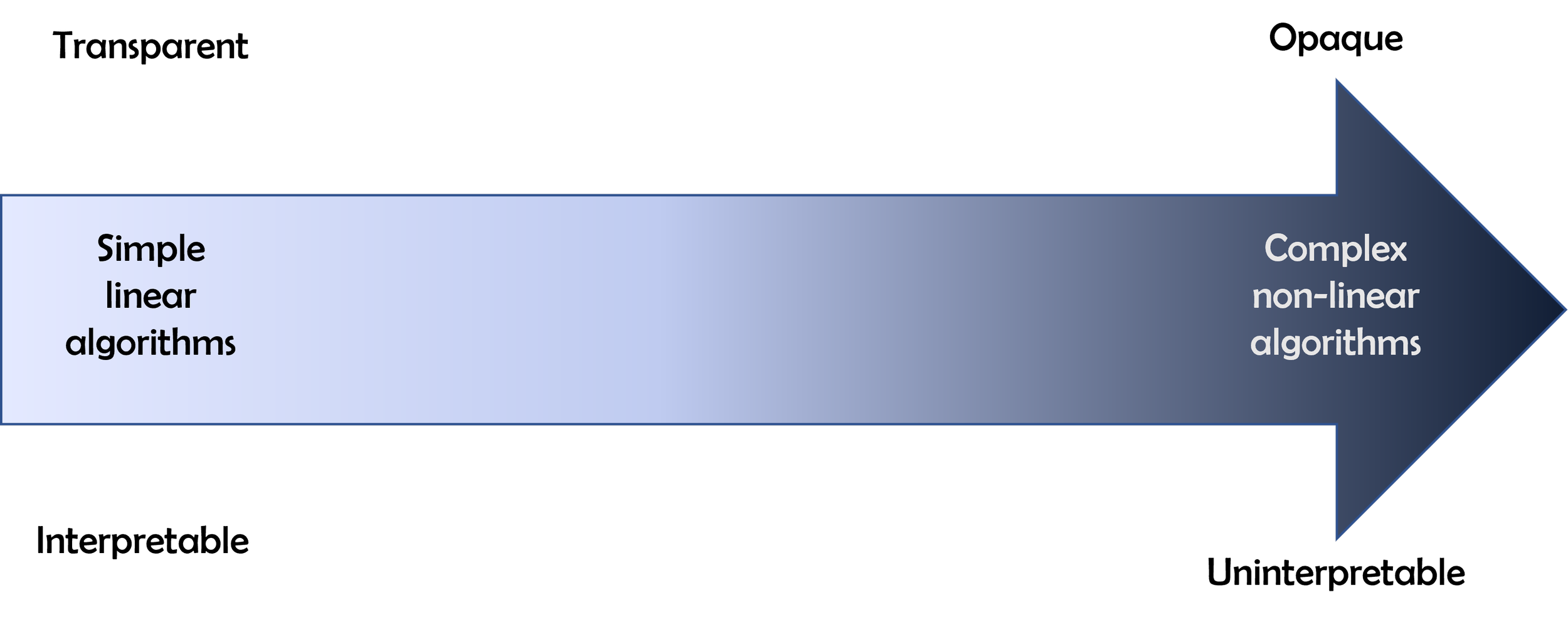

Algorithms comes in all shapes and sizes, ranging from very simple sets of rules like you find in a flow chart; through to ‘AI’ deep learning models like ChatGPT, which are both complex and almost entirely opaque. The algorithms lie on a spectrum of interpretability stretching from the transparent and easily understood through to the uninterpretable.

Spectrum of interpretability for algorithms

Simple and transparent

To help explore this issue, consider a hypothetical mortgage application decision. A simple algorithm might simply specify a maximum loan to income multiple of 3. That is, the application will be successful if the loan amount is less than 3 times the annual income of the applicant.

This is a rules based system which could be automated. However, it’s very easy to unravel and understand the decision logic. The specific threshold of 3 times income is a business decision, and one that applies to all potential customers. If your loan application is rejected, you might not like the decision, but it’s easy to understand. If you wanted to object, you could take issue with the chosen threshold or perhaps how your income has been calculated.

Complex and opaque

In the previous example, the loan to income ratio rule is based on, and justified by, a simple hypothetical model of the real world: we expect that the likelihood of loan default increases with loan to income ratio. Many of the recent advances in AI have come via algorithms where the data scientist no longer needs to hypothesise about real world relationships like these. All you need to do is provide lots of data, give the algorithm something to predict, and it will search for patterns which allow it to make this prediction. It will work out which input data to use, and how best to use it to make the most accurate prediction.

Using these new techniques, we could train a machine learning model to generate a credit score which is the likelihood of loan default based on lots of data about applicants². We might supply the algorithm with things like the applicant’s income and the amount of the loan; demographic data about the applicant; previous addresses and home ownership status; details of previous loans; spending patterns from bank statements; and so on. Assuming we have this data for past customers along with information about their loan repayments, then we can build a training dataset and feed this into a machine learning model.

There will most likely be cases where we need to help translate the data into a format that can be read by the chosen algorithm (this is in fact what data scientists spend much of their time doing). Ultimately, this means converting all the inputs into numbers, since all machine learning algorithms work through via sequence of arithmetic calculations.

Overall, there is very little human input which dictates or even suggests the gist of the logic to be adopted by the algorithm. This is precisely why these algorithms are so powerful. In areas where it is just too complicated to specify the expected patterns in advance — for example, things like image and speech recognition — these new methods work incredibly well. For these types of problems, this is the only game in town.

However, the same techniques can also be applied to problems which would previously have been solved using traditional statistical / econometric methods. From the data scientist’s perspective, the new techniques are much easier to apply, and will often give you very impressive levels of accuracy. But this power and ease of use is also the source of their Achilles’ heel: explaining decisions.

The output of the mortgage model is a credit score, and loans will only be approved if this score is below a certain threshold (i.e. the applicant is predicted to have a low likelihood of default). Superficially, a rejected applicant is in a similar position: the explanation for the decision is that their credit score was below the threshold set by the business. However, this begs the question of where that credit score came from.

Interrogating black box models

How can we explain the credit score and resulting loan decision when it comes from a black box model? There are three broad options, which are discussed in turn below:

Explore the calculations made to transform input data into outputs.

Change the inputs to the model and see how the output varies.

Assume the model does something sensible (and human-like), and check the training data for obvious errors and biases.

1. Explore the calculations

This is a fool’s errand. For any reasonably complex model, the step-by-step calculations will not offer any insight into how the final outputs are generated. This is the wrong level of abstraction. It would be like trying to figure out how a car engine works by looking at the component parts; or trying to understand human decisions by studying the movement of atoms in the brain.

Artificial neural networks, which are now commonly used for machine learning tasks, represent just such a complex model. Understanding how these work is an ongoing area of academic research. Even in specific and simple cases, we don’t yet really understand how and what these algorithms learn.

2. Explore marginal effects

This option is the closest to the black box analogy: we tinker with the inputs, and then see how the output changes. This can be a useful exercise. For example, we might find that the outputs barely change with respect to large variations in one of the inputs. As such, we can fairly safely conclude that this particular input is not important in the decision logic.

However, great care is needed with this marginal analysis. We can’t just change all the inputs at once, because we wouldn’t then know which input caused the variation in output. The traditional solution is to hold all inputs except one constant. For example, we might look at how the predicted loan default varies as we change the applicant age keeping all the other inputs the same.

The problem is that by looking at age in isolation we have lost sight of all other contextual factors: perhaps age isn’t important if the loan is a small proportion of the home value, but becomes significant as this proportion rises? And if we try to change more than one input at once, the number of combinations of different inputs soon becomes intractable. We still face the issue of the loss of context from the remaining inputs.

All these issues exist when we are modelling the real world — in both the physical and social sciences. In these cases, we fall back on a belief that there is a coherent and consistent underlying logic which explains how the data was generated. In the case of mortgage default rates, the story may be complex, but we believe that a logical explanation will exist. We generate hypotheses about these explanations, and then see how well they stack up against the observed data.

Interrogation of the model through options 1 and 2 ends up treating the model as if it were the data creating process: instead of modelling the real world, we are now modelling the model³. The problem with this approach is that we have very little reason to believe that the model’s outputs are produced by a coherent and consistent logic. We certainly should not assume that it will behave in the same way as the real world (i.e. that our credit scoring model has learned the true logic which explains mortgage default in the real world).

Crucially, there may not be any recognisable decision logic which explains the output of the model. It might simply be the result of a long set of arbitrary and independent rules. For example, in the case of mortgage default rates, the outputs might be generated by the equivalent of a list of if/then rules: “if age = a, income ratio = b, home ownership = c, etc then output = z”.

This may seem overly pessimistic. In most observed cases, and for most algorithms, the model outputs will show some consistency in response to marginal changes in inputs. The issue is that we cannot guarantee this behaviour, and therefore cannot guarantee that we can generate a useful and reliable explanation of the decision logic. Even if the model responds consistently for one range of inputs (e.g. ages between 20 and 60), we don’t know that this will behaviour will continue for all possible combinations and ranges of inputs we might use (e.g. ages below 20 or above 60).

3. Explore the training data

This exercise is definitely worth doing, but it is very limited in its power to explain individual decisions made by the algorithm. A model trained on biased data is likely to learn these biases, and will then repeat them when put to use.

The importance of undertaking such an assessment is highlighted by a recent legal case in the UK, when Court of Appeal found that the use of automated facial recognition technology by South Wales Police (SWP) was unlawful. One of the reasons was the failure of SWP to comply with the Public Sector Equality Duty and thus ensure that the system did not discriminate on grounds of race or gender.

The Court heard that SWP had not made any attempt to assess the training dataset for potential gender and racial bias. In response, SWP argued that the facial recognition software was provided by an external supplier, and access to the training dataset was not possible because it was commercially confidential. The Court noted that this may be understandable, but did not discharge the public body from its non-delegable duty.

An important question remains, however, whether assessment of the training data would really have been sufficient. The precise details of what a model learns during training depend not just on the training data, but on the nature of the algorithm, the training process and the objective which has been set (i.e. what do you want the model to learn to do). It is perfectly possible for an algorithm to become biased even when trained on what looks like an unbiased dataset.

Usually, when we look for bias in a dataset, we can only assess things crudely: for example, are there roughly even proportions of men and women; are there even proportions of different races? The trouble is that the task that we set the algorithm, such recognising faces or predicting loan defaults, is likely to vary in difficulty between individual examples. In very loose terms, model training works by rewarding the algorithm for getting better at the set task. Algorithms therefore tend to focus on the easiest ways to get better. This often means getting better at the simple examples at the expense of more difficult problems.

If the variation in difficulty is related to demographic characteristics, we can end up building an algorithm which unduly discriminates even though it was trained on a balanced dataset. For example, assessing loan default is likely to be more difficult for people with a shorter income history as there just isn’t as much information to learn from. Younger women with children are disproportionately more likely to fall into this category, and therefore may suffer indirect discrimination from an automated decision engine as a result.

Conclusions

This article has explored a number of issues relating to the regulation and the difficulty with explaining decisions made by an AI. The key conclusions are:

Modern machine learning algorithms are incredibly powerful because they can learn a decision logic purely from data. No human design input is required. However, this power comes at the expense of transparency. This learned decision logic is hidden from view, and therefore it may not be possible to provide an adequate explanation of decisions made by the algorithm.

Automated decisions which fall under the GDPR must be explainable.

Even if the GDPR does not apply, (UK) public bodies need to be able to explain their decisions, and to understand and assess their potential discriminatory implications in order to comply with the PSED.

Even when this is not a legal or regulatory issue, it’s important to question why an organisation would want to use a technology to make a decision if that decision cannot be explained. Where does responsibility for the decision lie; who should be accountable?

The simple conclusion is that transparency and explainability must factored into any decision to use AI: the most accurate, best performing algorithm will not always be the best choice.

[1] There are further protections against using special category personal data (race, ethnicity, etc). This requires explicit consent from the individual, or that the processing is necessary for reasons of “substantial public interest”.

[2] To give a concrete example of the way that such machine learning models could work and how they are trained, see this Kaggle competition. Kaggle is a website, now owned by Google, which hosts competitions for data scientists where the objective is to build the most accurate machine learning algorithms. In this competition, contestants were given a set of data about loan applicants and had to predict whether they would default. This detailed post explains the approach taken by the winning team. This makes use of a technique known as ensembles, where you take the average of the output from a number of different models. This makes for a more accurate model, but one which is fundamentally opaque.

[3] This is, in fact, precisely what some explainability techniques suggest: we train a secondary model to help us explain the underlying decision model.